Blitz News Digest

Machine Learning: When Robots Start Making Better Decisions Than Humans

Discover how machine learning empowers robots to outsmart humans in decision-making. Are we ready to trust their choices? Find out!

The Future of Decision-Making: How Machine Learning Outpaces Human Judgment

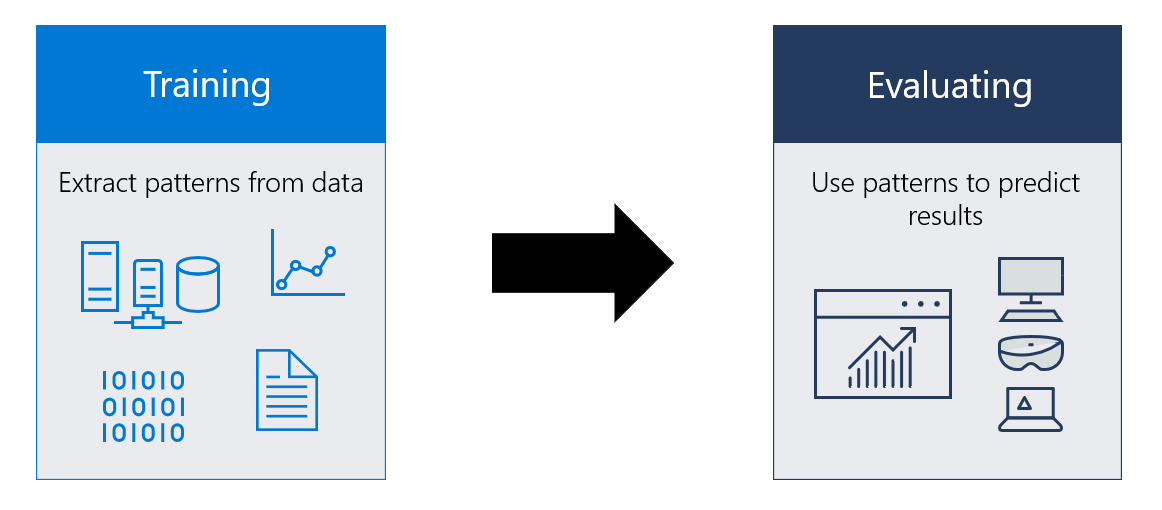

The landscape of decision-making is changing rapidly as machine learning begins to match or exceed human judgment on a growing range of well-defined tasks. Whereas people often rely on intuition and experience, machine learning algorithms analyze large volumes of data to identify patterns and make predictions. This capability lets organizations make informed decisions more quickly, which can improve efficiency and productivity. For instance, businesses can use predictive analytics to manage inventory, forecasting demand more accurately and reducing waste.
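To make the inventory example concrete, here is a minimal Python sketch of one classic forecasting technique, simple exponential smoothing. The weekly sales figures, the smoothing factor `alpha=0.3`, the safety-stock rule, and the function names are all hypothetical illustrations rather than a method the article describes. Production systems typically use richer models that also account for trend and seasonality.

```python
import math
from typing import Sequence


def exponential_smoothing_forecast(sales: Sequence[float], alpha: float = 0.3) -> float:
    """Return a one-step-ahead demand forecast via simple exponential smoothing.

    Each new observation pulls the running estimate toward itself by a
    fraction `alpha`, so older weeks lose influence geometrically.
    """
    if not sales:
        raise ValueError("need at least one observation")
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    level = sales[0]  # initialise the estimate with the first observation
    for observed in sales[1:]:
        level = alpha * observed + (1 - alpha) * level
    return level


def reorder_quantity(forecast: float, on_hand: int, safety_stock: int = 10) -> int:
    """Hypothetical rule: order enough to cover the forecast plus a safety buffer."""
    needed = forecast + safety_stock - on_hand
    return max(0, math.ceil(needed))  # never order a negative amount


# Hypothetical weekly unit sales for one product
weekly_sales = [120, 135, 128, 140, 150, 145]
forecast = exponential_smoothing_forecast(weekly_sales)
print(round(forecast, 1))                        # → 138.6
print(reorder_quantity(forecast, on_hand=100))   # → 49
```

A larger `alpha` reacts faster to recent weeks at the cost of more noise; a smaller one smooths more heavily.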

As the technology matures, the role of machine learning in decision-making is likely to keep expanding. Companies in sectors from finance to healthcare are increasingly adopting AI-driven platforms to assist with critical choices. These systems can reduce certain kinds of human error and surface insights that people might overlook. In the near future, we can expect a hybrid approach in which machine learning tools complement human decision-makers, combining the strengths of both for better outcomes.

Exploring the Ethics of AI: What Happens When Robots Make Better Choices?

The rapid advancement of artificial intelligence has sparked a crucial conversation about AI ethics. As AI systems increasingly take on decision-making roles, we must ask ourselves: what happens when robots make better choices than humans? In healthcare, for instance, AI algorithms can analyze vast amounts of data to recommend treatments that may surpass human intuition. However, this raises ethical questions about accountability, transparency, and bias, especially when the algorithms are trained on flawed or incomplete datasets. Striking a balance between using AI to improve outcomes and upholding ethical standards is essential to building a responsible AI future.

The implications of AI decision-making also extend beyond healthcare to sectors such as finance, law enforcement, and even social interactions. AI can make processes in these areas more efficient, but it also poses risks, such as perpetuating existing biases or making decisions without human empathy. As we explore the ethics of AI, it is imperative to establish frameworks that prioritize fairness and accountability. That way, we can harness the benefits of AI while remaining vigilant about the consequences of letting machines dictate outcomes in our lives.
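One way such a framework can make "fairness" measurable is to compare how often a system makes favorable decisions for different groups, a check known as demographic parity. The Python sketch below is a minimal illustration: the decisions, group labels, function names, and the `MAX_GAP` tolerance are all hypothetical. Demographic parity is only one of several competing fairness criteria, and a small gap does not by itself establish that a system is fair.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Fraction of favorable decisions (encoded as 1) within each group."""
    if len(decisions) != len(groups):
        raise ValueError("decisions and groups must have the same length")
    positives = defaultdict(int)
    totals = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(decisions, groups):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical loan approvals (1 = approved) for applicants in groups A and B
decisions = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
MAX_GAP = 0.1  # hypothetical tolerance, chosen only for illustration

print(selection_rates(decisions, groups))          # → {'A': 0.75, 'B': 0.25}
gap = demographic_parity_gap(decisions, groups)
print(gap, "flag for review:", gap > MAX_GAP)      # → 0.5 flag for review: True
```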

Can Machines Truly Understand Context? A Deep Dive into Decision-Making Algorithms

The question of whether machines can truly understand context has become increasingly relevant as decision-making algorithms evolve. Traditional rule-based systems struggled with the nuances and subtleties of human language and behavior. With the rise of machine learning, however, techniques from natural language processing (NLP) have improved machines' ability to interpret context. Modern models can also analyze several kinds of data, including text, speech, and images, which supports more sophisticated decisions based on contextual clues.
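The limitation of rule-based approaches is easy to see in a toy keyword scorer of the kind an early sentiment system might use. In this hypothetical Python sketch, the word lists and the function name are illustrative assumptions. Because each word is scored in isolation, negation and sarcasm are invisible to it.

```python
import re

# Hypothetical keyword lists for a toy rule-based sentiment scorer
POSITIVE = {"good", "great", "helpful"}
NEGATIVE = {"bad", "slow", "rude"}


def keyword_sentiment(text: str) -> int:
    """Score = number of positive keywords minus number of negative keywords.

    Words are looked up one at a time, so surrounding context is ignored.
    """
    words = re.findall(r"[a-z']+", text.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


print(keyword_sentiment("The support was good"))       # → 1
print(keyword_sentiment("The support was not good"))   # → 1 (negation ignored)
print(keyword_sentiment("Great, another slow reply"))  # → 0 (sarcasm invisible)
```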

Despite these advances, genuine contextual understanding remains a significant challenge. AI can make predictions and decisions based on patterns in data, but contextual awareness often requires a depth of comprehension that current algorithms struggle to reach. Factors such as emotional intelligence, cultural nuance, and historical context play a critical role in human decision-making yet are difficult to quantify and encode in models. As we continue to refine these algorithms, it is therefore essential to study their limitations and what those limitations mean for fields ranging from customer service to autonomous vehicles.